The Guardian reports on Ai-Da’s appearance in the House of Lords to contribute to a debate on AI technology, in an article written by Alex Hern.

AI-DA THE ROBOT SUMS UP THE FLAWED LOGIC OF LORDS DEBATE ON AI

Experts say it is the roboticists we need to hear from – and the people and jobs AI is already affecting

When it announced that “the world’s first robot artist” would be giving evidence to a parliamentary committee, the House of Lords probably hoped to shake off its sleepy reputation.

Unfortunately, when the Ai-Da robot arrived at the Palace of Westminster on Tuesday, the opposite seemed to occur. Apparently overcome by the stuffy atmosphere, the machine, which resembles a sex doll strapped to a pair of egg whisks, shut down halfway through the evidence session. As its creator, Aidan Meller, scrabbled with power sockets to restart the device, he put a pair of sunglasses on the machine. “When we reset her, she can sometimes pull quite interesting faces,” he explained.

The headlines that followed were unlikely to be what the Lords communications committee had hoped for when inviting Meller and his creation to give evidence as part of an inquiry into the future of the UK’s creative economy. But Ai-Da is part of a long line of humanoid robots who have dominated the conversation around artificial intelligence by looking the part, even if the tech that underpins them is far from cutting edge.

“The committee members and the roboticist seem to know that they are all part of a deception,” said Jack Stilgoe, a University College London academic who researches the governance of emerging technologies. “This was an evidence hearing, and all that we learned is that some people really like puppets. There was little intelligence on display – artificial or otherwise.

“If we want to learn about robots, we need to get behind the curtain, we should hear from roboticists, not robots. We need to get roboticists and computer scientists to help us understand what computers can’t do rather than being wowed by their pretences.

“There are genuinely important questions about AI and art – who really benefits? Who owns creativity? How can the providers of AI’s raw material – like Dall-E’s dataset of millions of previous artists – get the credit they deserve? Ai-Da clouds rather than helps this discussion.”

Stilgoe was not alone in bemoaning the missed opportunity. “I can only imagine Ai-Da has several purposes and many of them may be good ones,” said Sami Kaski, a professor of AI at the University of Manchester. “The unfortunate problem seems to be that the public stunt failed this time and gave the wrong impression. And if the expectations were really high, then whoever sees the demo can generalise that ‘oh, this field doesn’t work, this technology in general doesn’t work’.”

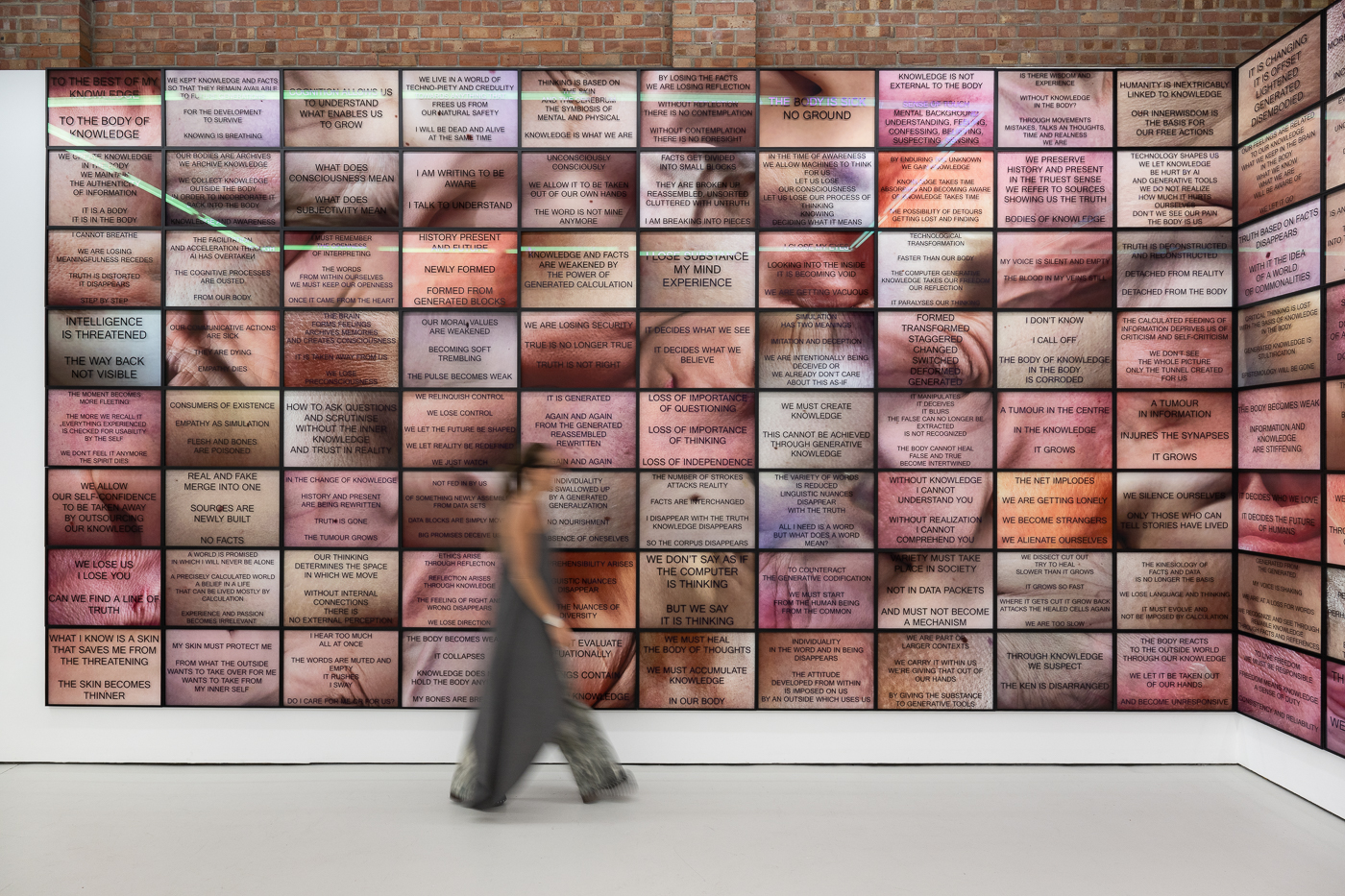

In response, Meller told the Guardian that Ai-Da “is not a deception, but a reflector of our own current human endeavours to decode and mimic the human condition. The artwork encourages us to reflect critically on these societal trends, and their ethical implications.

“Ai-Da is Duchampian, and is part of a discussion in contemporary art and follows in the footsteps of Andy Warhol, Nam June Paik, Lynn Hershman Leeson, all of whom have explored the humanoid in their art. Ai-Da can be considered within the dada tradition, which challenged the notion of ‘art’. Ai-Da in turn challenges the notion of ‘artist’. While good contemporary art can be controversial it is our overall goal that a wide-ranging and considered conversation is stimulated.”

As the peers in the Lords committee heard just before Ai-Da arrived on the scene, AI technology is already having a substantial impact on the UK’s creative industries – just not in the form of humanoid robots.

“There has been a very clear advance particularly in the last couple of years,” said Andres Guadamuz, an academic at the University of Sussex. “Things that were not possible seven years ago, the capacity of the artificial intelligence is at a different level entirely. Even in the last six months, things are changing, and particularly in the creative industries.”

Guadamuz appeared alongside representatives from Equity, the performers’ union, and the Publishers Association, as all three discussed ways that recent breakthroughs in AI capability were having real effects on the ground. Equity’s Paul Fleming, for instance, raised the prospect of synthetic performances, where AI is already “directly impacting” the condition of actors. “For instance, why do you need to engage several artists to put together all the movements that go into a video game if you can wantonly data mine? And the opting out of it is highly complex, particularly for an individual.” If an AI can simply watch every performance from a given actor and create character models that move like them, that actor may never work again.

The same risks apply to other creative industries, said Dan Conway from the Publishers Association, and the UK government is making them worse. “There is a research exception in UK law … and at the moment, the legal provision would allow any of those businesses of any size located anywhere in the world to access all of my members’ data for free for the purposes of text and data mining. There is no differentiation between a large US tech firm in the US and an AI micro startup in the north of England.” The technologist Andy Baio has called the process “AI data laundering” and it is how a company such as Meta can train its video-creation AI using 10m video clips scraped for free from a stock photo site.

The Lords inquiry into the future of the creative economy will continue. No more robots, physical or otherwise, are scheduled to give evidence.