Gretchen Andrew is cited in Analytics India Magazine's article on AI-generated art.

Who Should Get The Credit For AI-Generated Art?

By Ram Sagar

Edmond de Belamy, a portrait generated by a machine learning (ML) algorithm, was sold at Christie's art auction for $432,500, over 40 times the initial estimate of $10,000. Christie's marketed the whole event as "the first portrait generated by an algorithm to come up for auction". AI for art, especially generative adversarial networks (GANs), has come a long way since then.

The Christie's affair makes one wonder how good AI has become, but a closer look might suggest otherwise. Whether in the datasets fed to the algorithm or in the development of the algorithm itself, the role of humans cannot be denied. So, in a world that quickly embraces art in all its forms, the diminishing visibility of humans in AI-generated art creates ambiguity with regard to credibility and responsibility.

“Anthropomorphising AI systems can undermine our ability to hold powerful individuals and groups accountable.”

To address this, a group of researchers from MIT and several top European universities collaborated and published their findings in a recent paper.

"This portrait is not the product of a human mind. It was created by artificial intelligence," said one of the campaigns in the run-up to the Christie's auction. The researchers find this rhetoric troublesome. "Anthropomorphising AI systems can undermine our ability to hold powerful individuals and groups accountable for their technologically-mediated actions," the researchers wrote, citing previous work.

For instance, who should take the blame for a malicious deepfake generated by a neural network? Is it the developer who open-sourced the algorithm without grasping the potential collateral damage, or is it the algorithm itself?

Key Findings Of The Study

Previous work has made convincing arguments for why AI systems ought not to be credited with authorship. This work focuses on a different question: how does the public assign credit to an AI involved in making art?

The researchers used a series of vignette studies to explore the relationship between rhetoric around AI and the levels of responsibility assigned to various actors in an AI system.

The researchers consider credit and responsibility in the broad sense of public perception, focusing on people's intuitions about the vignettes. Beyond the responsibility attributed to the AI system itself, the study also considered the various human actors involved.

One of the findings is that the extent to which people perceive the AI system as an agent is correlated with the extent to which they allocate responsibility to it.

As plotted above, the researchers found that participants who anthropomorphised the AI more than the median assigned more responsibility to the crowd and the technologist than did those who anthropomorphised the AI less than the median.

“Anthropomorphising the AI system mitigates the responsibility to the artist, while bolstering the responsibility of the technologist.”

The study states that one participant even wanted to credit Robbie Barrat, the programmer who created the GitHub repository, for his contribution to Edmond de Belamy. How responsibility is allocated among the individuals involved in creating AI art depends on the language used to describe it. Therefore, it is important for artists, computer scientists, and the media at large to be aware of the power of their words, and for the public to be discerning about the narratives they consume.

Avoiding Moral Crumple Zones In The Future

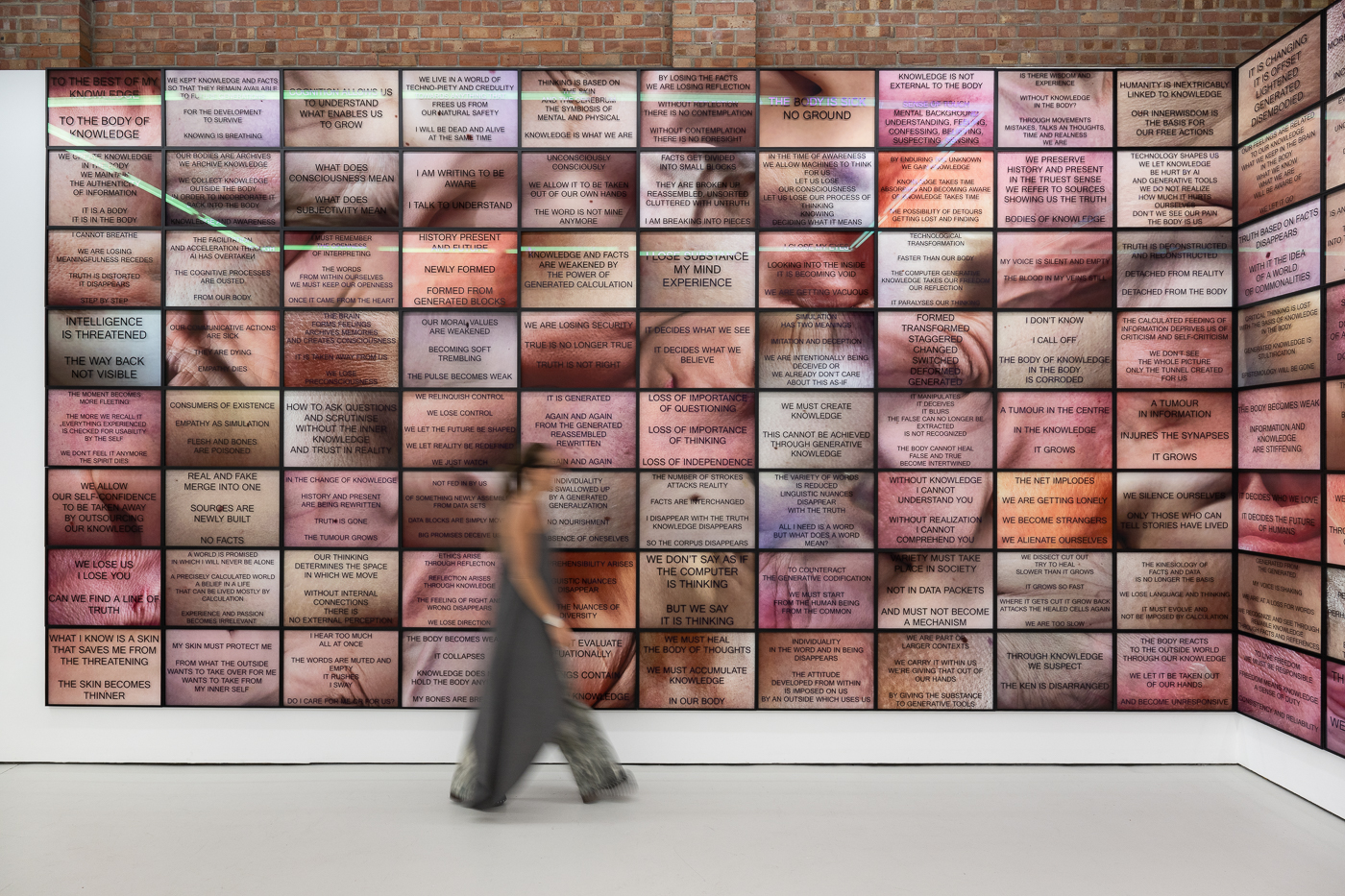

To understand an insider's perspective, we got in touch with Gretchen Andrew, an LA-based search engine artist. When asked about the credibility of AI in art, Gretchen said she has always felt that this question comes from an art world obsessed with the for-sale art object, whereas she believes art practices are more relevant than art output. She quoted Gombrich's The Story of Art, "There really is no such thing as Art. There are only artists." She added that she considers the search engine to be a co-author, and that search engine art is more a collection of practices and intentions than of art-object output.

"So then the relevant question becomes not who should get credit, but can an AI have an art practice."

Gretchen Andrew

Attributing too many human qualities to AI has its own advantages and disadvantages. One obvious drawback, as mentioned above, is that it undermines the human role: it takes ownership away from the creators and accountability away from the wrongdoers.

In the story of Edmond de Belamy, the researchers at the MIT Media Lab wrote, the first obstacle is identifying the set of potentially relevant human stakeholders and how they are positioned relative to one another within an AI system. AI is an umbrella term that refers to a web of human actors and computational processes interacting in complex ways.

Such a lack of understanding can produce what the authors call a Moral Crumple Zone, in which disproportionate outrage is channeled toward a peripheral person in an AI system. As AI systems become further integrated into human decision-making, they are likely to be increasingly anthropomorphised. The researchers believe that understanding the psychological mechanics of this "absorption of responsibility" by the AI is important for the accountability and governance of AI systems going forward.
